Risk Associated with Sub-Optimal Diagnostics

What is a “False Alarm”?

The definition of a False Alarm, unfortunately, depends on your perspective. In a very general sense, it is the improper reporting of a failure to the operator of the equipment or system. Among the many possibilities that could compromise the proper reporting of a failure, DSI addresses one specific cause, which is also the primary contributor to False Alarms: the “Diagnostic-Induced” False Alarm, or more simply the “Diagnostic False Alarm”.

Diagnostic False Alarms

The textbook example of a “Diagnostic False Alarm” occurs when the diagnostic equipment (usually on-board BIT) misreports the functional status (test results) of whatever the sensor is designed to detect in its Test Coverage. If the sensor itself is failing while the “sensed” function is working correctly, the result is a diagnostic-induced false alarm. This typically happens when a sensor is in diagnostic ambiguity with the function of the hardware it is supposed to be sensing. In that case the diagnostic design is inadequate: it cannot isolate a failure of the hardware function from a failure of the sensor.
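The idea of a sensor being “in diagnostic ambiguity” with the function it senses can be illustrated with a small sketch. This is not how eXpress represents or computes ambiguity; it is a minimal illustration that assumes Test Coverage can be written as a mapping from each failure mode to the set of tests that detect it. All failure mode and test names below are hypothetical.

```python
from collections import defaultdict


def ambiguity_groups(coverage):
    """Return groups of failure modes that share an identical test signature.

    `coverage` maps each failure mode to the set of tests that detect it
    (an assumed, simplified stand-in for a Test Coverage definition).
    """
    groups = defaultdict(list)
    for mode, tests in coverage.items():
        # Failure modes detected by exactly the same tests cannot be told apart.
        groups[frozenset(tests)].append(mode)
    # Only signatures shared by two or more failure modes are ambiguous.
    return [sorted(modes) for modes in groups.values() if len(modes) > 1]


# Hypothetical coverage data, invented for illustration only.
coverage = {
    "pump_function_lost": {"BIT_pressure"},
    "pressure_sensor_failed": {"BIT_pressure"},
    "valve_stuck": {"BIT_pressure", "BIT_flow"},
}

groups = ambiguity_groups(coverage)
print(groups)
```

Here the sensor failure and the loss of the pump function are detected by the same single test, so a failed sensor would be reported as a failed pump: a diagnostic false alarm. The stuck valve has a distinct signature and can be isolated.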

Diagnostic ambiguity is painfully obvious in the eXpress diagnostic analysis of the design. This is precisely why the Testability or Diagnostic Design analysis should be performed much earlier in the design development process: to “influence the design” for sustainment effectiveness. In fact, the diagnostic analysis should be based on the functional design characteristics, prior to parts selection. Then, as the reliability engineering data becomes available, it can be used to “augment” the diagnostic design. Working in this order makes it possible to perform a series of turnkey diagnostic analyses that include the new and evolving reliability data.

Reliability engineering produces such fundamental data as failure rates, failure modes, failure effects and the severity of those failure effects. But reliability engineering is not suited to determining how any failure is isolated from any other function in the operational or maintenance environment that supports the fielded product. This may be obvious to those with diagnostic savvy, but it still puzzles many traditionalists who stubbornly attempt to solve diagnostic engineering problems within the hard boundaries of reliability engineering.

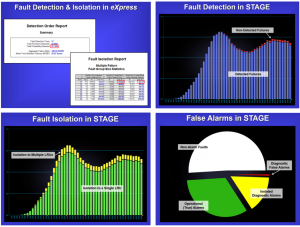

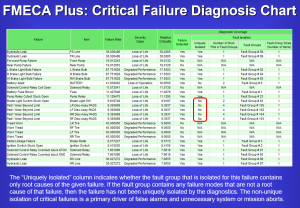

In contrast, “Diagnostic False Alarms” are easily depicted within an eXpress model, which fully integrates diagnostic design and reliability engineering. The eXpress Diagnostic Engineering tool first provides a menu for selecting traditional “turnkey” and “advanced” reliability assessment products. As reliability data is added to the eXpress model, these traditional reliability products can be augmented with diagnostic detail. The objective is not only to accommodate traditional reliability requirements, but also to extend these assessments to represent the impact of the design’s diagnostic capability. The resulting charts are as informative as they are astounding!

DSI’s eXpress excels at capturing and integrating reliability and diagnostic data into some of its key output assessment products. This was the impetus for establishing the “Critical Failure Diagnosis Chart” as a stock configuration that can be selected from the eXpress FMECA Plus module for precisely this purpose.

Another, more advanced method combines reliability and diagnostic design data to produce sustainment metrics and cost estimates through “STAGE” (DSI’s Health Management and Operational Support Simulation application). Traditional military CDRLs (Contract Data Requirements Lists) have been unable to distinguish the diagnostic contribution from the overly generic and ambiguous metric used to describe the likelihood of False Alarms on a fielded system. Because this FA metric is so poorly understood, many contractors are able to apply their own independent rules for what serves as the metric algorithm on a given program, or simply exclude FA by providing a variance with a justification or explanation. Either way, this is typical of how the ambiguous FA metric has “not seriously worked” in the military industry.

Solution for Assessing Diagnostics FA’s

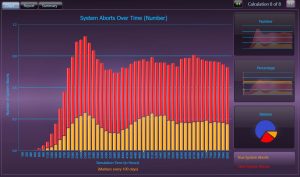

Combining the expert eXpress Diagnostic Design knowledgebase with the corresponding reliability engineering data in the STAGE simulation produces an objective and repeatable means of measuring the diagnostic contribution to the False Alarms expected to occur over a chosen sustainment lifecycle.

In this manner, the “diagnostic-induced” False Alarms are clearly observable in the output graphs from the STAGE simulation tool. Simulation is the most appropriate methodology for generating such values, because a system only experiences a False Alarm during its operation. Accordingly, the diagnostic constraints in each operational mode (BIT Test Coverage and Test Coverage Interference) are two important variables, and prerequisites for a more accurate calculation of a system’s Diagnostic False Alarms. Other contributing criteria are the expected failure rates (reliability) of the components that are interrelated in the operational mode of the system, and the impact of any prior (predictive and/or corrective) maintenance performed on that system.

A requirement to produce a value describing the False Alarm “rate” has traditionally been ambiguous and elusive, often leading to ways of circumventing accountability for performing any due diligence. The requirement could instead be much more specific, meaningful and, hopefully, universally achievable.

The occurrence of “Diagnostic” False Alarms “over time” is an objective, informative, measurable and manageable metric that represents the contribution of the diagnostics to the False Alarms experienced over the sustainment lifecycle. The values are produced by determining the design’s inherent diagnostic integrity and its operational effectiveness given any design constraints, while considering the impact of its operational maintenance philosophy. Thus, an assessment requirement to supply a single discrete value to characterize the metric is overly presumptuous and woefully misleading.

A False Alarm “rate”, like any metric based on a measurement of “rate”, is more appropriately characterized by stochastic means that capture random variables and the variations likely to occur over time.
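To illustrate why a single discrete value can mislead, the following is a minimal Monte Carlo sketch. It is not the STAGE algorithm. It assumes one sensor that is in ambiguity with the function it senses, a constant (exponential) sensor failure rate, immediate replacement after each failure, and that every sensor failure is reported as a failure of the sensed function. The rate, lifecycle length and run count are hypothetical values chosen for illustration.

```python
import random


def simulate_false_alarms(sensor_rate, hours, runs, seed=0):
    """Return the number of diagnostic false alarms in each simulated lifecycle.

    Assumptions (illustrative only): sensor failures arrive with exponential
    inter-arrival times at `sensor_rate` per hour, the sensor is replaced
    immediately, and each sensor failure is misreported as a function failure.
    """
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    counts = []
    for _ in range(runs):
        elapsed, alarms = 0.0, 0
        while True:
            elapsed += rng.expovariate(sensor_rate)
            if elapsed > hours:
                break
            alarms += 1
        counts.append(alarms)
    return counts


# Hypothetical inputs: 1 failure per 1000 hours over a 5000-hour lifecycle.
counts = simulate_false_alarms(sensor_rate=1e-3, hours=5000, runs=2000)
mean = sum(counts) / len(counts)
print(f"mean false alarms per lifecycle: {mean:.2f}")
print(f"range across simulated lifecycles: {min(counts)} to {max(counts)}")
```

The mean lands near the analytic expectation of rate times hours, but individual lifecycles vary widely around it, which is the information a single reported “rate” hides.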

The “Diagnostic” False Alarm “rate” is a metric that considers both the diagnostic integrity of the design and the impact of maintaining the deployed asset during its sustainment lifecycle. The values for this metric over the sustainment lifecycle are generated by transferring the Diagnostic Design captured in eXpress to the STAGE operational support simulation environment.

STAGE provides this capability through a simulation that considers both the diagnostic integrity of the design and any evolving sustainment paradigm. As such, detailed supporting data regarding the triggering “root cause(s)” of the failures is also available from the STAGE simulation.

Uncertainty of Diagnostic Integrity

Although a traditional Testability or Reliability assessment deliverable may paint a rosy picture of a design’s diagnostic, reliability or maintainability performance, the actual experience in the sustainment lifecycle could be “alarmingly” different. For example, designs that are accepted as meeting product assessment criteria in such areas as FD/FI, FA, FSA, MTBF, MTTI, MTTR, MTBCF, MTBUM, etc., may yield disturbing and contradictory results in a diagnostic simulation.

Many discoveries occur when diagnostic constraints are considered in simulation. These can expose, for example, the inability to isolate between critical failure modes at lower levels of the design, as discussed above. More broadly, many inherent operational and diagnostic assumptions are unknowingly overlooked in any traditional design-based assessment product that fails to consider the diagnostic impact on the support of the fielded design over time.

The STAGE simulation exposes such limitations, ideally early enough in design development to influence design decisions that improve sustainment effectiveness and value.

Impact of Diagnostic Ambiguity and Maintenance Philosophy

When components are replaced in any maintenance activity, the way the design groups its components may excel at solving one design objective but be a major cost driver in sustainment. This is typically caused by corrective actions that resort to replacing non-failed components along with “presumed-to-be-failed” components because there is no unambiguous means of isolation. Diagnostic ambiguity results when the Diagnostic Integrity of the design (“net” Test Coverage) is not well defined. In eXpress, the Test Coverage can be exhaustively validated early in design development, or at any time during the product lifecycle(s), and easily shared with the customer or SMEs to validate the correctness and thoroughness of the diagnostic conclusions.

If a failure occurs and the diagnostic design does NOT provide the capability to isolate between multiple (lost) functions, one of which may be a benign failure while another has a “severity” category of “Catastrophic”, then the only corrective or mitigating action at the system level is to “abort” the operation or mission. This is a common example of where the objective of “Operational Safety” takes precedence over any other operational objective.

If the Test Coverage for the on-board sensors was not vetted and validated by the diagnostic engineering activity during design development, many unknown or inadequate diagnoses will continue to randomly occur.

If the (operation, mission or system) abort was later discovered to have been caused by the benign failure, then we have a blatant example of a “False System Abort”, or FSA. These can occur with excessive uncertainty when the diagnostic integrity of the design – at the system level – is not fully considered. Such diagnostically induced System Aborts are avoidable from a diagnostic perspective.
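The abort logic described above can be sketched in a few lines. This is a simplified illustration rather than the method used by eXpress or STAGE: it assumes each failure mode carries a single severity label and that the system aborts whenever any failure mode in the unresolved ambiguity group is catastrophic. The failure mode names and severity labels are hypothetical.

```python
def should_abort(ambiguity_group, severity):
    """Abort if any failure mode that cannot be ruled out is catastrophic."""
    return any(severity[mode] == "catastrophic" for mode in ambiguity_group)


def is_false_system_abort(ambiguity_group, severity, actual_failure):
    """True when the system aborted but the real failure was not catastrophic."""
    aborted = should_abort(ambiguity_group, severity)
    return aborted and severity[actual_failure] != "catastrophic"


# Hypothetical failure modes that the diagnostics cannot tell apart.
severity = {"coolant_loss": "catastrophic", "indicator_lamp_out": "benign"}
group = ["coolant_loss", "indicator_lamp_out"]

print(is_false_system_abort(group, severity, "indicator_lamp_out"))  # True
print(is_false_system_abort(group, severity, "coolant_loss"))        # False
```

If the two failure modes could be isolated from each other, the benign failure would form its own group, `should_abort` would return `False` for it, and the False System Abort would not occur.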

After the system has been fielded and maintained, whether False Alarms or False System Aborts will increase or decrease cannot be determined with traditional approaches to design development for complex systems, despite the expertise of the engineers assigned to the development effort.

A true review of FA or FSA from a diagnostic design perspective can be performed during design development with STAGE. Many other diagnostic performance measures can be retrieved from the same simulation, which may provide more detailed insight into the heaviest contributors to costly or troublesome characteristics that are not otherwise apparent in traditional design assessment products.

Diagnostics Reflect Test Coverage Discipline and Prudency

If the Test Coverage definitions are “Garbage In” then the Diagnostics will be “Garbage Out”! Get the Test Coverage definitions correct! If an organization skimps on accurately and comprehensively defining the Test Coverage, then it should expect sloppy and costly sustainment that only escalates over time.

As of 2018, traditional DFT, Testability and even “Advanced Diagnostic” assessment tools lack any consistent approaches towards the vetting and validating of the Test Coverage used to compute the diagnostic conclusions – for complex systems.

If an organization is still using inadequate internal or third-party tools or techniques to define Test Coverage, such that the definitions cannot be consistently and thoroughly vetted or validated within the same diagnostic tool, then introducing that validation may initially be an added cost to the design development activity. It is only an added cost because it should already have been a standard design development function. But this cost is recoverable and will turn into a significant cost-cutting measure and a value-added activity!

Once the complex, large or critical design is captured in the eXpress modeling environment, comprehensive Test Coverage definitions can be entirely captured. This will ensure that the continued diagnostic assessments will reflect any of these diagnostic design constraints – no matter how extensive or subtle these constraints may seem. At that point, extensive diagnostic-informed evaluations and simulated operational support assessments can be performed that will reflect any of those diagnostic design strengths or weaknesses.

Attaining Test Coverage certainty is achievable, and it is the single biggest difference between deploying a diagnostically reliable asset and fielding yet another asset ridden with costly and unknown diagnostically constrained operational and sustainment liabilities.

Related Links

Influence the Design for DFT

Influence the IVHM Design for Enhanced Sustainment Capabilities

Reduce or Eliminate NFF’s, CND’s and RTOK’s

Related Videos

Diagnostic Validation through Fault Insertion

STAGE Operational Support Simulation – LIVE Demonstration

COTS-Based Solution for Through-Life Support