Advanced Testability and Diagnostic Metrics

Calculation of Advanced Metrics for Defense/Aerospace

As eXpress models are integrated into the system, the design must be able to detect failures within the context of an integrated diagnostic model, a consideration that is woefully inadequate in almost every other testability analysis. As the models are integrated, more test constraints become apparent because the integrated system imposes new restrictions on test access. This alone may significantly degrade the usability of test points that were accessible when the design stood independently of the other integrated designs.

Interpreting “Test Coverage”

Traditional approaches to assessing “test coverage” are inconsistent and frequently depend on the processes adopted by the manufacturer or designer. Each of these independent processes generally prioritizes its own objectives, constrained by its respective resources and by whatever, often inconsistent, requirements its immediate customer(s) impose. Since no single approach or metric value is universally applicable to every design, the “Test Coverage” supplied by the manufacturer for any design must be examined on an individual basis.

Even if the manufacturer can demonstrate, or “prove,” that its method(s) or processes are sufficient, customary or accurate, the buyer must still perform its due diligence to understand any delivered test coverage assessment or product, as well as the discipline of Testability itself. Computations may comply with any mandated document, standard or guideline, but whether the resulting metric is meaningful depends on the requirements of the buyer or system integrator.

Because industry does not impose a single approach or a universally reliable value for any metric used to compute test coverage or Testability in the deliverable assessment product(s), the buyer or system integrator can evaluate the test coverage at the next level of the design architecture. This evaluation becomes a core competency through the simple process of capturing the functional design in the eXpress diagnostic engineering environment. But any meaningful test coverage assessment must always consider the context of the other interdependent designs involved in the broader integrated system, from both an inherent and an operational perspective. As such, it must also consider input from other design disciplines, most specifically maintainability and reliability engineering.

For larger, more critical and more complex systems, all of the RAMS activities need to be engaged when attempting to calculate “Test Coverage.”

Input from Reliability Engineering

Reliability Engineering declares whether a function or failure is “detectable” in assessment products such as the FMECA. Such a declaration may hold true for an independent design, but once the design is integrated with many other designs in the fielded product, there is no certainty that any given function or failure will be detected in the deployed asset. Assessing this is not a core capability of Reliability Engineering, because the discipline does not concern itself with operational and deployed test coverage, fault groups or diagnostic effectiveness.

As a result, although the assessment products produced by Reliability Engineering are diagnostically incoherent on their own, reusing the data artifacts available from Reliability Engineering is a huge win in ISDD. Rather than stopping at a “detectable” declaration, the FMECA should also identify the Test and Diagnostic Coverage, which is a core output of the eXpress FMECA Plus “Critical Failure Diagnosis Chart.”

Input from Maintainability Engineering

Traditional Reliability assessment products omit the observability of failure(s) at the various levels of the design hierarchy. True, an FMECA identifies the failure effects at the next higher design level, but the discipline has no way to assure accurately that those failure effects are sensed or observed within any deployed testing paradigm. If this information were available during design development, it could inform maintainability analyses early enough to be leveraged in efforts such as the Level of Repair Analysis (LORA). Currently, however, this duty falls outside the scope and expertise of both disciplines, but it doesn’t have to. With ISDD, this knowledge can be provided to both Reliability and Maintainability Engineering in a transparent and timely manner.

Input from Safety Analyses

Even system-level Safety Analyses (e.g., FTAs or Event Trees) cannot accurately determine the root cause or primary failure(s) at the lowest functional level. These analyses remain at the higher levels of the design to assess the criticality of combinations of lower-level failure causes, rather than at any specified or consistent level of the integrated system’s design. Such an endeavor is much more of a Diagnostic Engineering specialty. Because Test and Diagnostic Coverage is nonetheless essential, the eXpress FTA is an integrated “turnkey” output, and the eXpress Diagnostic Reports can deliver a synchronized message across an assortment of interrelated reports, one of which is shown below:

Input from Testability and Diagnostics Engineering

To consider the lowest levels of the design, high-end diagnostic engineering (e.g., eXpress) must exploit the specific details of the “Test Coverage.” Determining the functional or failure propagation “covered” by a test (e.g., BIT or any interrogation access point that can be used to measure diagnostic status) must begin by considering the various operational states of the fielded product, because Test Coverage is likely to be more constrained in some operational modes than in others.
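One common way to express “Test Coverage” numerically is a failure-rate-weighted fault detection fraction: the failure rate of failures observed by at least one test, divided by the total failure rate. The minimal sketch below assumes hypothetical failure modes, failure rates and per-state test availability (none of this is eXpress data or an eXpress API). It illustrates how the same design can score differently in two operational states when a test point becomes inaccessible.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    name: str
    failure_rate: float     # hypothetical, failures per million hours
    detected_by: frozenset  # names of tests that can observe this failure


def fault_detection(failure_modes, available_tests):
    """Failure-rate-weighted fraction of failures detected by at least one
    test that is available in a given operational state."""
    total = sum(m.failure_rate for m in failure_modes)
    detected = sum(m.failure_rate for m in failure_modes
                   if m.detected_by & available_tests)
    return detected / total if total else 0.0


# Hypothetical design data, for illustration only.
FAILURE_MODES = [
    FailureMode("power_supply_open", 40.0, frozenset({"BIT_voltage"})),
    FailureMode("sensor_drift", 25.0, frozenset({"BIT_sensor", "test_point_7"})),
    FailureMode("actuator_stuck", 35.0, frozenset({"test_point_7"})),
]

# Tests reachable in each operational state (hypothetical).
OPERATIONAL_STATES = {
    "ground_maintenance": frozenset({"BIT_voltage", "BIT_sensor", "test_point_7"}),
    "in_flight": frozenset({"BIT_voltage", "BIT_sensor"}),  # test point not accessible
}

if __name__ == "__main__":
    for state, tests in OPERATIONAL_STATES.items():
        print(f"{state}: FD = {fault_detection(FAILURE_MODES, tests):.0%}")
```

In this made-up example, coverage drops from 100% on the ground to 65% in flight, because the only test able to observe the stuck actuator is unreachable in that state.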

Determining Test Coverage can be messy, particularly when the design is a collection of integrated independent designs, which is the reality for many advanced programs. The task can become very complex for anyone not equipped with the diagnostic savvy, tool or approach needed to perform it in a thorough, timely and consistent manner. This is the initial reason to fault Reliability assessment products as tools when the objective is successful and effective diagnostics or troubleshooting.

Leveraging: Reliability-Informed Diagnostic Assessments

Once the eXpress model has imported all of the relevant data from Reliability and Maintainability Engineering, the Testability assessments can be augmented with failure criticality, probability of occurrence and a host of other factors that typically affect “diagnostic effectiveness” in any of the sustainment paradigms.

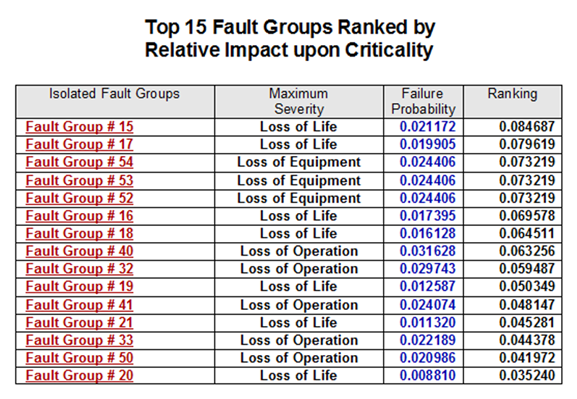

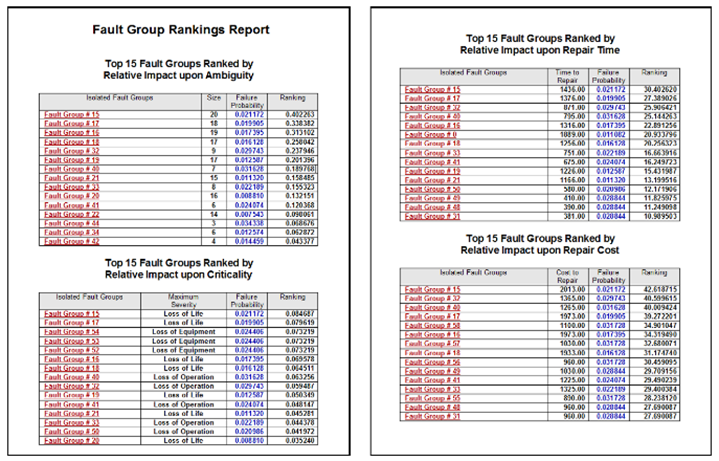

The Fault Group Rankings Report provides several lists of isolated fault groups, each ranked by a different criterion. By identifying which fault groups are responsible for inflated ambiguity, excessive time to repair and so on, this report can be extremely valuable to analysts looking to improve a diagnostic design. For instance, if the analyst were interested in identifying the areas of the diagnostic design that most affect Inherent Availability, which equals MTBF / (MTBF + MTTR) and therefore rises as the Mean Time to Repair (MTTR) falls, they could review the fault groups near the top of the table ranked by “Relative Impact upon Repair Time.” These are the fault groups that contribute most to the system MTTR (the ranking for each fault group in this section is calculated as the time to repair that group multiplied by the relative failure probability of that group).
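The ranking rule described above (time to repair a fault group multiplied by that group’s relative failure probability) can be sketched as follows. The fault group names, failure rates and repair times are hypothetical, and relative failure probability is assumed here to be each group’s share of the total failure rate; the exact calculation inside eXpress may differ. Because these products sum to the failure-rate-weighted mean repair time, the sketch also reports that MTTR.

```python
from typing import NamedTuple


class FaultGroup(NamedTuple):
    name: str
    failure_rate: float  # hypothetical, failures per million hours
    repair_hours: float  # hypothetical time to repair the whole group


def rank_by_repair_impact(groups):
    """Rank fault groups by (relative failure probability x time to repair).

    Relative failure probability is ASSUMED to be the group's share of the
    total failure rate, so the impacts sum to the failure-rate-weighted MTTR.
    """
    total_rate = sum(g.failure_rate for g in groups)
    impacts = {g.name: (g.failure_rate / total_rate) * g.repair_hours
               for g in groups}
    ranking = sorted(impacts.items(), key=lambda item: item[1], reverse=True)
    mttr = sum(impacts.values())
    return ranking, mttr


# Hypothetical fault groups, for illustration only.
GROUPS = [
    FaultGroup("FG1: PSU", 50.0, 1.0),
    FaultGroup("FG2: sensor + harness", 20.0, 4.0),
    FaultGroup("FG3: actuator/controller", 30.0, 3.0),
]

if __name__ == "__main__":
    ranking, mttr = rank_by_repair_impact(GROUPS)
    for name, impact in ranking:
        print(f"{name:28s} {impact:.2f} h")
    print(f"MTTR = {mttr:.2f} h")
```

Note that FG1 has the highest failure rate yet ranks last, because it is quick to repair; surfacing that kind of trade-off is what a repair-time impact ranking is for.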

ISDD is the most sensible approach to designing for sustainment during the design development lifecycle. ISDD enables every design and support discipline to both contribute to and benefit from designing for true diagnostic effectiveness. To design for diagnostic effectiveness, it must be possible to evaluate the diagnostic integrity of the design beyond simple inherent measures. These evaluations must encompass a wide range of assessments that address a variety of criteria.

Testability is a Role-Player – Diagnostic Engineering Avails the Venue

With ISDD, you can determine the test coverage and the diagnostic integrity of the design under alternative priorities or operational constraints. These priorities can be characterized in the diagnostic model simply by adjusting the weighting values and property attributes assigned to the components in the eXpress model(s). Using its “Diagnostic Study” facility, eXpress generates extensive and comprehensive reports for any desired system assessment.

So, here is where we learn the difference between “an optimized diagnostic approach” and “the most effective diagnostic approach,” given a set of dynamic criteria and the discovered constraints on operational Test Coverage.

The Test Coverage of any delivered design needs to be interpreted in terms of the design’s integration into the deployed asset, throughout the entire Product Lifecycle (Design Development through Sustainment), and within the boundaries established during design development. Below are just a few assessments that depend entirely on Test Coverage, regardless of the investments made in other RAMS activities:

- Safety versus Availability

- Time to Mediate and Impact on diagnostic strategy, number of Fault Groups, Fault Group Size

- Time to Repair and Impact on diagnostic strategy, number of Fault Groups, Fault Group Size

- Availability (Mission Readiness) vs. Cost

- Mission Assurance versus Cost

- Mission Readiness vs. Mission Assurance

- …and many more!
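Several of the trade-offs listed above hinge on fault group size. One commonly used way to quantify it is the failure-rate-weighted percentage of failures isolated to a fault group of N or fewer replaceable items. The sketch below computes that percentage for hypothetical fault groups; the data and the chosen values of N are illustrative assumptions, not eXpress output.

```python
def isolation_to_group_size(groups, max_size):
    """Failure-rate-weighted fraction of failures isolated to a fault group
    containing max_size or fewer replaceable items.

    groups: list of (failure_rate, group_size) tuples (hypothetical data).
    """
    total = sum(rate for rate, _ in groups)
    isolated = sum(rate for rate, size in groups if size <= max_size)
    return isolated / total if total else 0.0


# Hypothetical fault groups: (failures per million hours, items in the group)
FAULT_GROUPS = [(50.0, 1), (20.0, 2), (30.0, 3)]

if __name__ == "__main__":
    for n in (1, 2, 3):
        pct = isolation_to_group_size(FAULT_GROUPS, n)
        print(f"Isolated to {n} or fewer items: {pct:.0%}")
```

Shrinking the larger fault groups (for example, by adding test access) raises the lower-N percentages, which typically shortens time to repair at the cost of additional test resources.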

Advanced Testability – Metrics

- Fault Group Reports based on the many ways of slicing and dicing data inside eXpress (by entity, customer, subsystem, etc.)

- eXpress Reports that show prioritization by Failure Rate and Time impact, etc.

- …and many more!

Advanced Testability – Interoperability

- DFT to Integrated Testability

- Hard Stops of DFT

- …and much more!

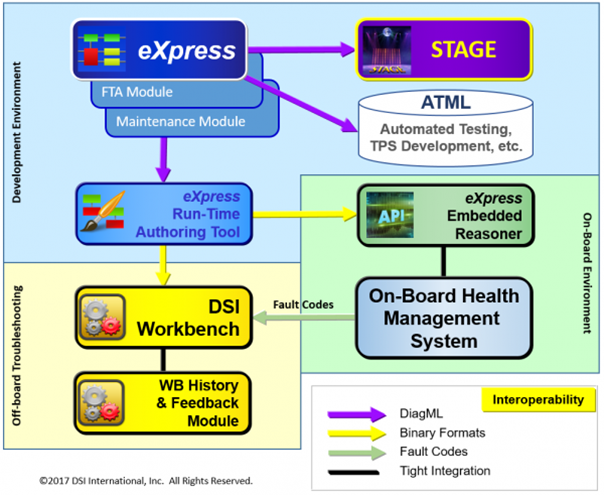

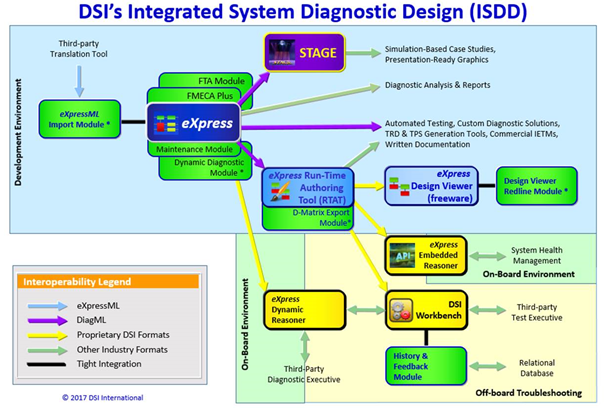

To date, DSI’s ISDD accomplishes more than IEEE 1522 ever envisioned. Going further, ISDD will reuse outputs from the high-end diagnostic assessments created in eXpress to facilitate advanced Diagnostic Reasoning technologies, while also providing robust gateways that support the diagnostic and logistic support aspirations preliminarily documented in several other anticipated flavors of ATML. As an industry leader, DSI remains committed to bringing diagnostic savvy to every phase of the product lifecycle.

Any complex, large or critical system program ought to be aligned with a process that begins with the reality that the design will experience failures and “require” some continued level of maintenance in order to keep serving its intended objective. The metrics described in the Testability Standards are an ideal place to begin appreciating the roles that both Testability and Diagnosability play. Every time a BIT failure or error code lights up, consider it a reminder that Diagnostic Engineering is an implied “Design Requirement” and is truly the only design or support activity engaged throughout the entire product lifecycle. Yes, ALWAYS a REQUIREMENT!
